The previous post was from the summer of 2012, when Resolve had just gained ACES support, Nuke supported it only barely through OCIO, and most people (me included) had not heard of the ACES system and its logic, much less used it. I tried to understand the logic behind the whole ACES workflow and I think I did not get it too wrong. In this post I hope to add some more bits and pieces to the general concept, and in the next posts to dig deeper into the gritty guts of ACES-based image manipulation.

First off, the official documentation:

Official ACES web page

ACES documentation

ACESCentral.com

https://github.com/ampas/aces-dev

The documentation page contains the specifications of the different ACES components in PDF format. They are a good read but can be overwhelmingly technical as a first introduction. ACES Central is a user forum that does not have many posts yet, but it can be a valuable source of information. The GitHub page is the ACES source code, so to speak: the color transforms written in CTL (Color Transform Language) that are used, either directly or, more commonly, baked into LUTs, by the various applications that implement the ACES system.

ACES in a nutshell

Seen from a distance, the ACES system consists of a new color encoding system, a color pipeline (how color data moves from acquisition to presentation and archival), and the relevant metadata and data containers (file formats) needed to hold the data. The most important piece is the new color encoding system, which includes new gamut definitions, transfer functions etc. The color pipeline deals with how to handle non-ACES data and what to do with ACES data once we have it in our hands.

ACES color spaces/gamuts/encodings

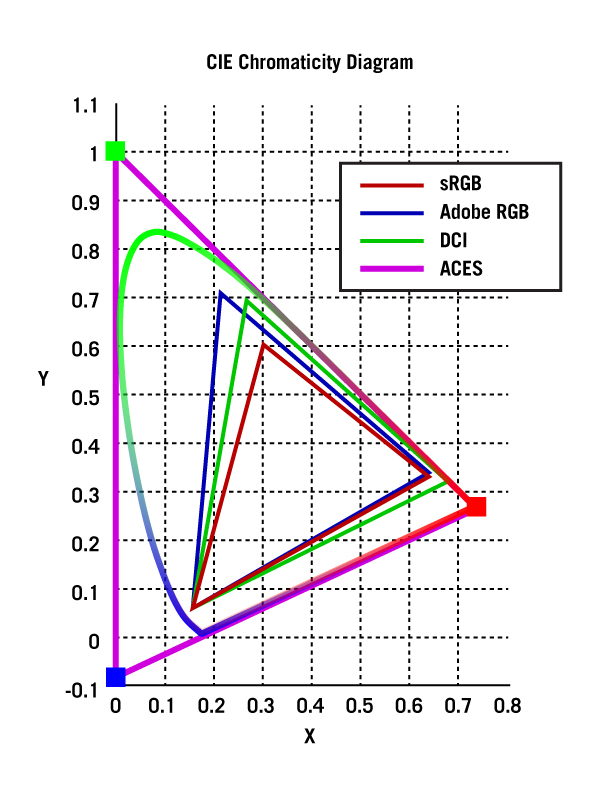

The main building block of ACES is a new color gamut, also called ACES. It is also referred to as ACES2065-1, or sometimes SMPTE ST 2065-1 after the standard that defines it. ACES is based on an additive RGB color model. It is not the same thing as CIE XYZ, although its primaries are specified as CIE xy chromaticity coordinates, so converting between ACES and XYZ takes only a 3x3 matrix. The primaries are chosen so that all visible colors, i.e. everything contained within the spectral locus (the horseshoe shape), fall inside the ACES gamut triangle.

Image from fxguide.com
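Because the primaries are just xy chromaticity pairs, the matrix that takes ACES RGB to CIE XYZ can be derived from them with the usual normalized primary matrix construction: turn each primary into XYZ with Y = 1, then scale the three primaries so that RGB (1, 1, 1) lands on the white point. The sketch below does that in plain Python (no NumPy), assuming the AP0 primaries R (0.7347, 0.2653), G (0.0, 1.0), B (0.0001, -0.077) and the ACES white point (0.32168, 0.33767) as given in the specs; the helper names are my own, not from any ACES library.

```python
# Minimal sketch: build an RGB -> CIE XYZ matrix from xy chromaticities,
# here for the ACES AP0 primaries and the ACES white point.

AP0_PRIMARIES = {"r": (0.7347, 0.2653), "g": (0.0000, 1.0000), "b": (0.0001, -0.0770)}
ACES_WHITE = (0.32168, 0.33767)


def xy_to_XYZ(x, y):
    """Chromaticity (x, y) -> XYZ with luminance Y = 1."""
    return (x / y, 1.0, (1.0 - x - y) / y)


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, v):
    """Solve m @ s = v with Cramer's rule (fine for a small 3x3 system)."""
    d = det3(m)
    result = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = v[r]
        result.append(det3(mc) / d)
    return result


def rgb_to_xyz_matrix(primaries, white):
    # Columns of P are the XYZ of each primary, each with Y = 1.
    cols = [xy_to_XYZ(*primaries[c]) for c in ("r", "g", "b")]
    P = [[cols[c][r] for c in range(3)] for r in range(3)]
    # Per-primary scale factors so that RGB (1, 1, 1) maps to the white point.
    s = solve3(P, xy_to_XYZ(*white))
    return [[P[r][c] * s[c] for c in range(3)] for r in range(3)]


M = rgb_to_xyz_matrix(AP0_PRIMARIES, ACES_WHITE)
for row in M:
    print(["%.10f" % v for v in row])
```

The middle row of the result holds the luminance (Y) weights of the three primaries. The blue weight comes out negative because the AP0 blue primary has a negative y coordinate, i.e. it is an imaginary color.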

There is some debate over whether it was wise to create a new gamut instead of treating XYZ as an RGB space. XYZ also covers all visible colors and is used as an intermediate space in almost all color transforms anyway. Yes, it is possible to calculate xy coordinates for XYZ "primaries": we simply treat the X, Y and Z components directly as R, G and B (which they correlate with pretty nicely). The xy coordinates of the primaries are then R = 1.0, 0.0; G = 0.0, 1.0 and B = 0.0, 0.0, which forms a right triangle whose diagonal (x + y = 1) runs along the red end of the spectral locus. Visually it is bigger and seems to waste more space on imaginary colors, but in reality the XYZ unit cube is no less efficient than the ACES gamut volume at containing the visible colors.
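A quick way to check the XYZ primary coordinates is to push the unit vectors of X, Y and Z through the chromaticity formulas x = X / (X + Y + Z) and y = Y / (X + Y + Z). The sketch below does that and also compares the xy areas of the XYZ and AP0 triangles with the shoelace formula, assuming the AP0 coordinates from the spec; the function names are illustrative only.

```python
# Sketch: treat the X, Y and Z axes as "RGB primaries", find their xy
# chromaticities, and compare the xy triangle areas of XYZ and ACES AP0.


def chromaticity(X, Y, Z):
    """CIE xy chromaticity of an XYZ triple."""
    total = X + Y + Z
    return (X / total, Y / total)


def triangle_area(a, b, c):
    """Area of a triangle in the xy plane (shoelace formula)."""
    return abs(a[0] * (b[1] - c[1]) + b[0] * (c[1] - a[1]) + c[0] * (a[1] - b[1])) / 2.0


# Unit vectors of XYZ used as R, G and B.
xyz_r = chromaticity(1.0, 0.0, 0.0)  # (1.0, 0.0)
xyz_g = chromaticity(0.0, 1.0, 0.0)  # (0.0, 1.0)
xyz_b = chromaticity(0.0, 0.0, 1.0)  # (0.0, 0.0)

# ACES AP0 primaries.
ap0_r, ap0_g, ap0_b = (0.7347, 0.2653), (0.0, 1.0), (0.0001, -0.0770)

xyz_area = triangle_area(xyz_r, xyz_g, xyz_b)
ap0_area = triangle_area(ap0_r, ap0_g, ap0_b)
print("XYZ primaries:", xyz_r, xyz_g, xyz_b)
print("xy area  XYZ: %.4f  AP0: %.4f" % (xyz_area, ap0_area))
```

Keep in mind that the xy area is only a 2D projection and ignores luminance, so on its own it says nothing about how efficiently the 3D volumes are used.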

The property of containing all visible colors is necessary to preserve all possible color data, whatever the acquisition format or quality. It is mainly useful for archival, as in the future we might use more capable displays that can show some of the more saturated colors that today's common display technology cannot reproduce. Another part of it is to offer a working space that is wide enough not to accidentally clip colors through some obscure color operation. It is still possible to generate colors outside the ACES gamut and, intriguingly, even digital cameras can produce colors that fall outside it (how that can happen is maybe a topic for another post).

ACES is not the first gamut to contain imaginary colors (outside the spectral locus) and will not be the last. A triangle can only cover a curved convex shape such as the spectral locus if its vertices lie outside that shape, so it is impossible to create a three-component color system with primaries inside the spectral locus that describes all visible colors with positive values. It can be done if we allow negative values, but those mess up most color operations in rendering and compositing, so no one really wants to do that. What ACES does to rendering is a topic for another post :)
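The convex-shape argument can be checked numerically with a point-in-triangle test in the xy diagram. The sketch below uses a handful of spectral locus points transcribed from the CIE 1931 2° observer tables (rounded to four decimals, so treat them as illustrative) and compares AP0 with Rec.709, whose primaries all lie inside the locus. The `inside` helper and its tolerance are my own choices.

```python
# Sketch: which sampled spectral-locus points fall inside a gamut triangle
# in the CIE 1931 xy diagram?

SPECTRAL_LOCUS = {  # wavelength (nm) -> (x, y), CIE 1931 2-degree observer
    380: (0.1741, 0.0050), 460: (0.1440, 0.0297), 480: (0.0913, 0.1327),
    500: (0.0082, 0.5384), 520: (0.0743, 0.8338), 540: (0.2296, 0.7543),
    560: (0.3731, 0.6245), 580: (0.5125, 0.4866), 600: (0.6270, 0.3725),
    620: (0.6915, 0.3083), 700: (0.7347, 0.2653),
}

AP0 = [(0.7347, 0.2653), (0.0000, 1.0000), (0.0001, -0.0770)]
REC709 = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]  # primaries inside the locus


def cross(o, a, p):
    """z component of (a - o) x (p - o); its sign tells which side of o->a p is on."""
    return (a[0] - o[0]) * (p[1] - o[1]) - (a[1] - o[1]) * (p[0] - o[0])


def inside(tri, p, eps=1e-9):
    """True if p is inside or on the edge of triangle tri (either winding)."""
    r, g, b = tri
    d = [cross(r, g, p), cross(g, b, p), cross(b, r, p)]
    return all(v >= -eps for v in d) or all(v <= eps for v in d)


for name, tri in (("AP0", AP0), ("Rec.709", REC709)):
    missed = [nm for nm, xy in SPECTRAL_LOCUS.items() if not inside(tri, xy)]
    print(name, "misses wavelengths:", missed)
```

With these samples AP0 contains every locus point (700 nm sits exactly on its red vertex), while Rec.709 misses all of them: a triangle whose corners are inside a convex region cannot reach that region's boundary.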

ACEScg, ACEScc, ACEScct, ACESproxy

In addition to the ACES gamut there are additional gamuts (fortunately just one, to be exact). Its red and blue primaries sit very close to the spectral locus and its green primary lies a tad outside it. Since a gamut is described by its primaries and white point, the ACES gamut is labeled AP0, or ACES Primaries 0. The other gamut is called ACES Primaries 1, or AP1. The exact coordinates can be found in the specs; what matters is that AP1 is smaller, contains mostly real colors and cannot represent some very saturated greens and cyans. This means that gamut clipping can occur when converting from AP0 to AP1.

The AP1 gamut is used in the encodings named ACEScg (cg stands for Computer Graphics: rendering, compositing etc.), ACEScc (cc is for Color Correction, i.e. grading), ACEScct (cc for color correction and t for toe, as its curve has a toe part; see the section on transfer functions) and ACESproxy, which is meant for on-set monitoring (one should not save files in ACESproxy, it is for live use only).

The main differences between the cg, cc, cct and proxy variants are the transfer function (the non-linear encoding of values) and the use cases: ACEScg is linear, while ACEScc, ACEScct and ACESproxy use logarithmic curves. Gamut-wise they are identical.
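As an example of how the transfer functions differ, here are the ACEScc and ACEScct encodings (linear AP1 value in, encoded value out) written out in Python, as I read them from the Academy specs S-2014-003 (ACEScc) and S-2016-001 (ACEScct); double-check the constants against the current documents before relying on them. ACEScg needs no function at all, as it is linear.

```python
import math

# ACEScct toe constants.
X_BRK = 0.0078125              # linear break point (2**-7)
Y_BRK = 0.155251141552511      # encoded value at the break point
A = 10.5402377416545           # slope of the linear toe segment
B = 0.0729055341958355         # offset of the linear toe segment


def lin_to_acescc(lin):
    """Linear AP1 -> ACEScc: a pure log2 curve, with special handling near zero."""
    if lin <= 0.0:
        return (math.log2(2.0 ** -16) + 9.72) / 17.52
    if lin < 2.0 ** -15:
        return (math.log2(2.0 ** -16 + lin * 0.5) + 9.72) / 17.52
    return (math.log2(lin) + 9.72) / 17.52


def lin_to_acescct(lin):
    """Linear AP1 -> ACEScct: the same log2 curve above X_BRK, a straight-line toe below."""
    if lin <= X_BRK:
        return A * lin + B
    return (math.log2(lin) + 9.72) / 17.52


for v in (0.0, 0.001, 0.18, 1.0):
    print(v, round(lin_to_acescc(v), 4), round(lin_to_acescct(v), 4))
```

Above the break point the two curves are identical (18% grey lands at about 0.4136 in both). Below it ACEScc keeps following the log curve down into negative values, while ACEScct switches to a straight line, which is the toe that gives ACEScct its t.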

Transfer functions

A transfer function takes a range of input values, for example 0.0 to 1.0, and maps them into some other range. The mapping can be non-linear: it can have toes and shoulders, and the result can be lifted, compressed or clamped. The main purposes for using a transfer function are:

- redistribute the data so that limited storage space (bit depth) is used more efficiently (in raster files, in a digital video signal)

- bake in a display curve that is necessary to produce correct tone scales on some device (Rec.709 gamma correction curves for a reference monitor)

- fit a higher dynamic range into a smaller range (HDR compression, shaper LUTs etc.)

- modify color data so that it is more suitable for some specific operation (grading, keying, filtering etc.)
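To make the first point more concrete, the sketch below counts how many 10-bit code values each stop (each halving of light) gets when the 0.0 to 1.0 range is stored linearly versus with a simple log2 curve. The log curve is a hypothetical one made up for illustration (it spreads ten stops evenly over the code range); it is not any of the ACES curves.

```python
import math

# Sketch: integer code values per stop, linear vs. a hypothetical log2 encoding.


def codes_per_stop_linear(bits, stops):
    """Code values per stop when [0, 1] is quantized linearly."""
    max_cv = 2 ** bits - 1
    counts = []
    for s in range(stops):
        hi = math.floor(max_cv * 2.0 ** -s)        # code at the top of this stop
        lo = math.floor(max_cv * 2.0 ** -(s + 1))  # code at the bottom of this stop
        counts.append(hi - lo)
    return counts


def encode_log(lin, stops):
    """Hypothetical log2 encoding: 2**-stops -> 0.0, 1.0 -> 1.0, clamped."""
    v = 1.0 + math.log2(max(lin, 2.0 ** -stops)) / stops
    return min(max(v, 0.0), 1.0)


def codes_per_stop_log(bits, stops):
    """Code values per stop after applying encode_log before quantizing."""
    max_cv = 2 ** bits - 1
    counts = []
    for s in range(stops):
        hi = round(encode_log(2.0 ** -s, stops) * max_cv)
        lo = round(encode_log(2.0 ** -(s + 1), stops) * max_cv)
        counts.append(hi - lo)
    return counts


print("linear:", codes_per_stop_linear(10, 10))
print("log   :", codes_per_stop_log(10, 10))
```

Linear storage spends half of all code values on the brightest stop and leaves a single code value for the tenth stop down, while the log curve gives every stop roughly 102 code values.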

to be continued...